最近开始学习凸优化,虽然作为数学系的学生曾上过数值优化课,但当时学得是“稀里糊涂”(in boss’s words)。当然以前也没有认识到数值优化的应用,现转为初级码农,从业ML,遂得知优化之实用(当然主要是每年paper无数),重头学习凸优化。目前正在看Yurii Nesterov所编的教材的Introductory Lectures on Convex Optimization: A Basic Course,为免看完即忘,因此作了笔记。

What’s in this book

- General optimization problems (Unfortunately “unsolvable”)

- Smooth convex optimization

- Nonsmooth convex optimization

- Convex minimization problems with explicit structure

PS: Goals of this course (from Introduction section):

- Show that: despite their attraction, the proposed “solutions” of general optimization problems very often cannot satisfy the expectations of a naive user. The main face, which should be known to any person dealing with optimization models, is that in general optimization problems are unsolvable. (The reality is always cruel)

- Discussing methods for solvable nonlinear models, namely convex optimization problems.

Chapter 1 – Nonlinear Optimization

General formulation of the problem

Let $x \in \mathbb{R}^n, S \subset \mathbb{R}^n$ and $f_0(x), \ldots, f_m(x)$ be real-valued functions of $x$. We deal with different variants of the following general minimization problem:

$$

\min f_0(x), ~ \text{s.t. } f_j(x) \ \& \ 0, j = 1, \ldots, m; ~ x \in S.

$$

where the $\&$ can be $\leq, \geq$ or $=$.

“In general, optimization problems are unsolvable”

Performance of numerical methods

Usually, the numerical methods are developed for solving many different problems with similar characteristics. Thus, a performance of method $\mathcal{M}$ on the whole class $\mathcal{F}$ (of problems) is a natural characteristics of its efficiency.

Some concepts:

- $\Sigma$: A known (for numerical scheme) “part” of problem $\mathcal{P}$ is called the model of the problem

- $\mathcal{O}$: The process of collecting the data is called oracle

- Zero-order oracle: return function value $f(x)$

- First-order oracle: return $f(x)$ and gradient $f’(x)$

- Second-order oracle: return $f(x), f’(x)$ and Hessian $f’’(x)$

- $\mathcal{F}$: Problem class $\mathcal{F} \equiv (\Sigma, \mathcal{O}, \mathcal{T}_\epsilon)$, $\mathcal{T}_\epsilon$ is stopping criterion. And $\mathcal{M}$ method’s performance on problem class is its performance on the worst $\mathcal{P}_w$ from $\mathcal{F}$.

- Mesure of complexity of problem $\mathcal{P}$ for method $\mathcal{M}$ (As finding an exact solution is always impossible for numerical optimization, to solve a problem means to find an approximate solution with an accuracy $\epsilon > 0$.)

- Analytical complexity: number of calls of oracle, which is required to solve problem $\mathcal{P}$ up to accuracy $\epsilon$

- Arithmetical complexity: The total number of arithmetic operations (including the work of oracle and work of method), which is required to solve problem $\mathcal{P}$ up to accuracy $\epsilon$

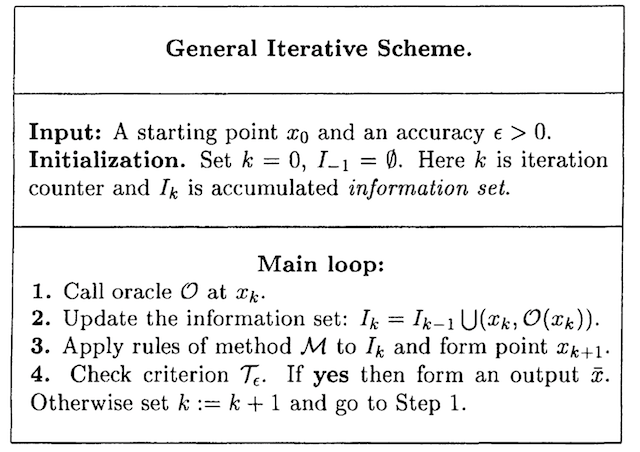

General Iterative Scheme

Local black box

- The only information available for the numerical scheme is the answer of the oracle

- The orical is local: A small variation of the problem far enough from the test point $x$ does not change the answer at $x$

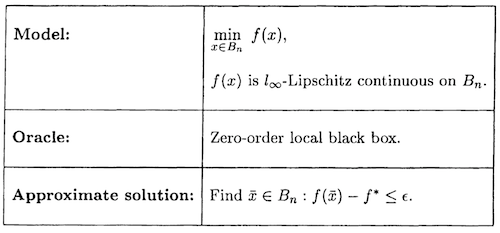

Complexity bounds for global optimization

Consider the class of problems $\mathcal{C}$, $\mathbb{R}^n$ equiped with $l_\infty$-norm, $B_n = \{ x \in \mathbb{R}^n | 0 \leq x^{(i)} \leq 1, i = 1, \ldots, m \}$,

Then it is proved that the uniform grid method is optimal for $\mathcal{C}$, which has a lower bound $(\left \lfloor \frac{L}{2\epsilon} \right \rfloor)^n$. Then for $L=2, n=10, \epsilon = 0.01$ it would take $10^{20}$ calls of oracle (0-order), at least 31, 250, 000 years.

The lower bounds

- To achieve the stopping criterion, for the worst problem (specific to the method), how many calls of oracles are needed for the method.

- Based on black box concept

- Valid for all reasonable iteative schemes, thus provide us a lower estimate for analytical complexity on the problem class

- Very often employ the idea of resisting oracle. A resisting oracle tries to create a worst problem for each particular method.

The upper complexity

- How many calls of oracles can assure the stopping criterion is achieved, for any problem of the problem class.

- For example, for the Lipschitz continuous functions on $B_n$, the uniform grid method has upper bound $(\left \lfloor \frac{L}{2\epsilon} \right \rfloor +2)^n$.

The optimal method

- The lower and upper bounds coincide up to a constant multiplicative factor

- if $\epsilon = O(\frac{L}{n})$, the uniform grid method is an optimal method for $\mathcal{C}$

Misc: Classes of differentiable functions

Let $Q \subset \mathbb{R}^n$, we denote $f \in C_L^{k, p}(Q)$ if

- $f$ is k times continuously differentiable on $Q$

- Its pth derivative is Lipschitz continuous on $Q$ with constant $L$

Intuitively, the Lipschitz continuity limits how fast the functions can change